My projects

I've been programming since I was 12 years old. I've taught myself countless technologies and completed numerous personal projects. I'm passionate about bringing that background to the workplace. I live to learn and grow.

During my career I've founded two SQA departments. In both cases I defined and implemented the SDLC, STLC, branching models, and CI/CD processes for those development teams. At HCD I won an award for my efforts around this as well as for authoring a markup language. At CBOE I was responsible for validating highly critical data for the world's largest and oldest options exchange in addition to many other critical components.

Life is also about balance. I have three amazing kids and believe in a work/life balance. When we're strong at home, we're strong at work. I embrace people where they're at and do my best to help those around me to grow all while being humble enough to learn from others.

Completed two summers of an IT support internship at CME Group. Received high praise for completing several hardware refresh projects and handling technical support tickets.

Founded the SQA department, defined the development and testing processes, managed product releases, and created testing infrastructure. Received the Pillar of Excellence Award, awarded to 1% of the company and signed off by the CEO.

Restored a Python and Selenium POM based test framework for testing a drag and drop GUI for defining trading algos.

Founded the SQA department for the Livevol team and created/maintained their DevOps infrastructure. Defined SQA practices and created several test frameworks for REST APIs, data integrity, and multicast message normalization. Created the Ansible based deployment framework. Created CI/CD pipelines in Jenkins that were integrated into a newly defined weekly release process.

Transformed the quality engineering department over the course of a year. Created three TypeScript Playwright frameworks sharing the same common code to test frontend applications. Page Objects and API helper methods were generated using AI. Maintained three legacy Selenium C# frameworks. Oversaw 12 SDETs including contractors. Created a K6 based performance test framework from scratch.

Attended WIU focusing on computer science and philosophy.

Wrote an addon that played World of Warcraft. Automated a priest class character to always make the optimal healing decisions.

Launched an online business where artists could host their t-shirt designs for a profit. We eventually zeroed in on the pop culture gifting market.

Completed a JavaScript based video game as a gift for my kids. It took ~3000 hours to complete and taught science and philosophy.

Currently a few years into a stock AI trading project. Data is stored in MongoDB and the project is written in Java. Trading logic evolves each generation.

Doing research on a new project that connects to ChatGPT and modifies its own code at runtime using reflection. The software takes XML based spec updates and reaches out to ChatGPT's API to modify itself in real time. The package is intended to be used as an import to make all my software projects easier to write and maintain.

On my first day on the job I noticed you could execute an XSS exploit and gain vertical and horizontal access throughout the system. Eventually this resulted in us executing a full security audit of the system. I led this project using Burp Scanner, which uncovered over 2000 security related bugs in the system. Manual security testing revealed another 500 bugs. These included XSS, SQL injection, authentication issues, vertical/horizontal privilege escalation, cross-site request forgery, clickjacking, session hijacking, and more. I oversaw the prioritization and validation of bug fixes on this project. Eventually we were able to resolve all major issues.

I was the creator and maintainer of a keyword driven Java based Selenium test framework. This was in the early days of Selenium frameworks, prior to POM/Cucumber standardization. This framework was particularly effective, greatly reducing our manual regression testing efforts. I was also able to innovate a solution for validating file downloads that is still more effective to this day than any other industry standard which relies on robot libraries.

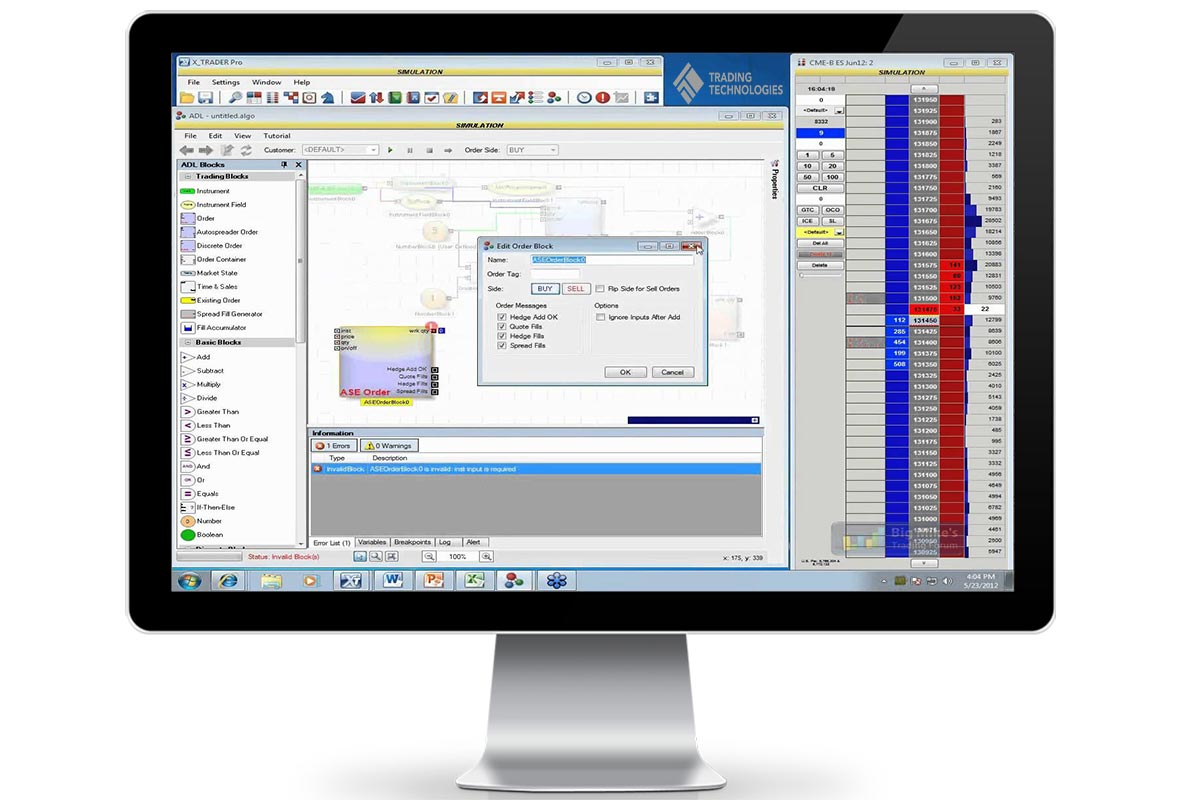

While working at Trading Technologies I restored a Python based Selenium framework back to working order. This framework had hundreds of failing testcases upon arrival. The Algo Design Lab was a drag and drop SPA for defining trading algos. The restoration of the framework allowed regression testing to be reintroduced into the software development process for a critical application.

The SubscriberMail (Harland Clarke Digital) email marketing platform had a Java based REST API that allowed you to manage your email campaigns. I authored a Java based regression/smoke testing framework for testing this API. I also mentored a teammate on writing testcases for this framework. This allowed us to roll out regular updates with rapid testing feedback.

Prior to SubscriberMail migrating to a REST based API, they had a SOAP based API for managing email campaigns. I authored a Java based framework for regression testing this API. It had 100% regression coverage and was successful at keeping the API stable.

Authoring marketing emails is complicated even for advanced HTML professionals. Different email clients (e.g. Gmail, Outlook, mobile) all render emails differently. They support different tags and functionality. This made authoring universally pleasing emails extremely challenging. To resolve this challenge I authored a markup language called SMML. The tags in this language represented containers and structures within the email. These tags converted into HTML that was known to function well in all clients. We would render live previews of emails on the shadow DOM as they were being authored.

Validating file downloads in Selenium can be challenging. The primary reason is that the file download prompt is an OS level prompt rather than being something the browser can interact with. The industry has struggled with this, often resorting to robot library based solutions, which can be unreliable.

To resolve this issue, I innovated a solution that is still more reliable than any other industry solution. When clicking on a download button, the HTTP response message typically has a 'content-disposition' header in it. This is the header that tells a browser to open an OS level download prompt. My solution intercepts this response using a Java based proxy library. Intercepting the response, I remove this header, thus preventing the OS prompt.

Additionally, the body of the HTTP response contains all of the file data. From the proxy intercept we can convert this data into a byte array and have the file in memory. At this point you can load it into PDFBox or Apache POI and perform any validations you like. This is a fast, reliable solution that is better than any other industry solution.
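The original solution used a Java based proxy library. As a rough, language-neutral illustration of the same idea, here is a minimal Python sketch in which a plain dictionary and a bytes value stand in for the intercepted response. The function name `intercept_download` and the sample header values are hypothetical and are not taken from the original framework.

```python
from typing import Dict, Tuple


def intercept_download(headers: Dict[str, str], body: bytes) -> Tuple[Dict[str, str], bytes]:
    """Drop the Content-Disposition header and capture the file bytes.

    Stand-in for a proxy response filter: 'headers' and 'body' represent the
    HTTP response that the proxy would hand to the filter.
    """
    # HTTP header names are case-insensitive, so compare in lower case.
    filtered = {
        name: value
        for name, value in headers.items()
        if name.lower() != "content-disposition"
    }
    # The response body is the file itself; keep a copy in memory for validation.
    captured = bytes(body)
    return filtered, captured


# Hypothetical response for a PDF download.
response_headers = {
    "Content-Type": "application/pdf",
    "Content-Disposition": 'attachment; filename="report.pdf"',
}
response_body = b"%PDF-1.4 example bytes"

headers_out, file_bytes = intercept_download(response_headers, response_body)
```

With the header gone the browser no longer raises the OS level prompt, and `file_bytes` can be handed to whatever parser fits the file type.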

While at SubscriberMail (Harland Clarke Digital), I was tasked with managing production deployment automation. I gathered details from developers and system administrators on deployment steps and turned this into a bash script. This worked well and greatly improved our ability to reliably deploy.

After some time, we decided to improve upon this further and turned the bash scripts into Ansible playbooks. I again led the initiative on this project and produced a dependable Ansible based solution. This allowed us greater control of the configuration management. I would later take this knowledge and create an even more elegant Ansible based solution while working at CBOE.

Demo accounts at SubscriberMail were treated as their own feature. Custom pages were created just for demo reasons. As the software evolved, these demo pages also needed to be updated. It was tedious and less than ideal.

Instead of using custom demo pages, I created the ability for us to feed demo data into accounts designated as demo accounts. This allowed us to retire the old demo pages, and developers no longer needed to worry about them.

The demo application ran on a hosted server and simulated real account activity so that the demo account always displayed up to date data. Using Monte Carlo simulation we were able to generate realistic looking data trends in the reports.
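As a minimal sketch of how randomly sampled data can produce a believable trend, the Python below simulates a daily metric as a random walk and averages many runs. The function names, the starting value, and the drift and volatility numbers are all assumptions chosen for illustration; the original demo application is not shown here.

```python
import random
from typing import List


def simulate_daily_values(days: int, start: float, drift: float,
                          volatility: float, seed: int) -> List[int]:
    """Simulate one possible history of a daily metric (e.g. email opens)."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    value = start
    series = []
    for _ in range(days):
        # Each day moves by a random percentage drawn from a normal distribution.
        change = rng.gauss(drift, volatility)
        value = max(0.0, value * (1.0 + change))
        series.append(round(value))
    return series


def average_trend(runs: int, days: int) -> List[float]:
    """Average many simulated runs to estimate the expected trend line."""
    totals = [0.0] * days
    for run in range(runs):
        series = simulate_daily_values(days, 1000.0, 0.01, 0.05, seed=run)
        for day, value in enumerate(series):
            totals[day] += value
    return [total / runs for total in totals]
```

A single run gives the noisy, realistic looking line for a report, while the averaged runs give a smooth reference trend.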

Trading Technologies ADL (Algo Design Lab) needs to withstand high stress loads placed on it by algos. This interface is backed by an API that required extensive stress testing to ensure continued stability. I produced a Python based stress testing framework with threading and ramp up capabilities. The report included charts using Plotly to display the stress ramp up timeline and show when issues started to arise.
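The threading and ramp up idea can be sketched roughly as follows. This is an illustrative outline only, not the original framework: `run_ramp_up` and `FlakyApi` are hypothetical names, and the fake target simply fails on every Nth call so the example runs without a real API.

```python
import threading
import time
from typing import Callable, Dict, List


def run_ramp_up(target: Callable[[], None], steps: List[int],
                calls_per_worker: int) -> List[Dict[str, float]]:
    """Call 'target' from a growing number of threads and record errors per step."""
    results = []
    for workers in steps:
        errors = 0
        lock = threading.Lock()

        def worker() -> None:
            nonlocal errors
            for _ in range(calls_per_worker):
                try:
                    target()
                except Exception:
                    with lock:  # protect the shared error counter
                        errors += 1

        threads = [threading.Thread(target=worker) for _ in range(workers)]
        started = time.perf_counter()
        for thread in threads:
            thread.start()
        for thread in threads:
            thread.join()
        results.append({
            "workers": workers,
            "calls": workers * calls_per_worker,
            "errors": errors,
            "seconds": time.perf_counter() - started,
        })
    return results


class FlakyApi:
    """Hypothetical stand-in for the API under test: fails on every Nth call."""

    def __init__(self, fail_every: int) -> None:
        self._lock = threading.Lock()
        self._count = 0
        self._fail_every = fail_every

    def __call__(self) -> None:
        with self._lock:
            self._count += 1
            current = self._count
        if current % self._fail_every == 0:
            raise RuntimeError("simulated failure")
```

Each entry in the returned list is one point on the ramp up timeline, which is the kind of data a charting library can plot to show where errors begin.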

Our clients were able to manage their email marketing campaigns through our API. This included the creation of new email campaigns, adding recipients to mailing lists, campaign statistics, and much more. To ensure the stability of this API I created a complete stress testing suite in JMeter. Every method was covered with stress tests and extensively tested. Several API methods were found that broke after moderate amounts of stress were placed on them. Through the bug resolution cycle we were able to patch these issues and deliver a high performance API.

I was hired at CBOE to both launch an SQA department and implement all of the DevOps for a team of engineers. The first priority was setting up deployment automation to set the team on a route towards a CI/CD process with weekly releases. There were approximately 15 different projects to deploy to 5 different environments which represented hundreds of different servers, each with their own unique configuration settings. These projects were written in various languages including C#, Java, Python, C++, and NodeJS.

To accomplish this, I created an Ansible project capable of building and deploying each of these to all environments including production. This project was designed with core code that all projects could make use of for their deployments. The core code included functionality like file transferring, service starting/stopping, file compression/decompression, server information gathering, and much more.

Each project would plug into this core functionality with its own build definition. The build definition defined build commands, where binaries would compile to, and target server file structures, to name a few possibilities.

Configurations for each target server were managed in Git, which allowed developers to update them at will and differently on each branch if required.

This project had many sophisticated enhancements including binary caching in Artifactory, config only deploys, self test mode, pre-deploys, symlink based version switching, backup management, tagging, and others.
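Symlink based version switching, for example, usually means each release lives in its own directory and a single link points at the active one. The sketch below shows that general technique in Python rather than Ansible. The directory layout, the `current` link name, and the function names are assumptions for illustration, and the atomic rename behaviour relied on here applies to POSIX systems.

```python
import os
import tempfile


def switch_version(releases_dir: str, version: str, link_name: str = "current") -> str:
    """Point releases_dir/<link_name> at releases_dir/<version>."""
    target = os.path.join(releases_dir, version)
    if not os.path.isdir(target):
        raise FileNotFoundError(target)
    link_path = os.path.join(releases_dir, link_name)
    temp_link = link_path + ".tmp"
    if os.path.lexists(temp_link):
        os.remove(temp_link)  # clean up a leftover from an interrupted switch
    os.symlink(target, temp_link)
    # Renaming over the old link swaps versions in one step on POSIX,
    # so readers never see a missing 'current' link.
    os.replace(temp_link, link_path)
    return link_path


def demo() -> str:
    """Deploy two hypothetical versions and return the name of the active one."""
    with tempfile.TemporaryDirectory() as root:
        for version in ("1.0.0", "1.1.0"):
            os.mkdir(os.path.join(root, version))
        switch_version(root, "1.0.0")
        link = switch_version(root, "1.1.0")
        return os.path.basename(os.path.realpath(link))
```

Rolling back is the same call with the previous version name, which is what makes this approach attractive for quick recoveries.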

The Ansible project also had its own CI/CD pipeline where it tested itself. The project had a monitoring page that gave advanced metrics on performance and stability. Hundreds of deploys were conducted successfully and quickly on a weekly basis.

In addition to authoring CBOE's Ansible project, I also authored their CI/CD pipelines in Jenkins Blue Ocean using Groovy Jenkinsfiles. These scripts were triggered by pull requests. When a developer wanted to merge a feature branch into the dev branch, these scripts would execute. The scripts would build the project, deploy it to the test environment (using Ansible), and then launch the appropriate test framework against it. The results would be reported back to Bitbucket and let the developer know if their code was stable enough to merge.

CBOE worked with massive amounts of historical data in an environment where it needed to process millions of multicast messages per second. This is why they produced their own MySQL based db engine with performance enhancements. Updating one table could potentially mean several other downstream tables were altered.

To test this, I created a framework that would feed data into the database and monitor where updates took place. This allowed us to create testcases where we could map the expected end state of the database after each datapoint is passed into it. This framework generated hundreds of testcases and allowed us to safely complete updates and migrations with that db engine.

The one test framework CBOE had in place upon hiring me was a Selenium POM framework written in Java to test a NodeJS SPA (single-page application). Maintaining and extending this framework became my responsibility, and in my time there we updated it consistently as the application evolved and demanded additional testcases. This framework ran on Docker/Selenoid and could execute 10 testcases in parallel for improved performance. We successfully integrated this with our CI/CD process.

While at CBOE we had to create a Selenium POM/Cucumber framework to test their "Datashop" application based on NopCommerce. For this project I mentored two senior SDETs, reviewed their work, provided feedback/improvements, and helped bring it to completion. This framework also ran on Docker/Selenoid. We successfully integrated it into our CI/CD process which allowed for weekly deploys.

Each exchange sends multicast messages in a different format with different values. To handle this, our system normalizes these messages into a consistent format. To test this system I created a test framework where you could define multicast messages in a JSON format. The framework would send these into the system as multicast messages and then validate that the normalized message was as expected. We created thousands of testcases for this framework, allowing us to validate that our system continued to support incoming messages of all types.
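A JSON defined testcase of this kind might look roughly like the sketch below. Everything in it is hypothetical: the exchange names, the field names, and the `normalize` function are stand-ins, and the message is passed directly to the normalizer instead of being sent over multicast as the real framework did.

```python
import json
from typing import Any, Dict

# Hypothetical per-exchange mappings from raw field names to normalized names.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "EXCH_A": {"sym": "symbol", "px": "price", "qty": "size"},
    "EXCH_B": {"ticker": "symbol", "last": "price", "volume": "size"},
}


def normalize(exchange: str, raw: Dict[str, Any]) -> Dict[str, Any]:
    """Stand-in for the system under test: rename fields to the common format."""
    mapping = FIELD_MAPS[exchange]
    return {mapping[key]: value for key, value in raw.items() if key in mapping}


# A testcase is pure data: the input message and the expected normalized output.
TESTCASE_JSON = """
{
  "name": "exchange B trade is normalized",
  "exchange": "EXCH_B",
  "input": {"ticker": "XYZ", "last": 12.5, "volume": 300},
  "expected": {"symbol": "XYZ", "price": 12.5, "size": 300}
}
"""


def run_testcase(text: str) -> bool:
    """Load one JSON testcase, run it, and report whether the output matched."""
    case = json.loads(text)
    actual = normalize(case["exchange"], case["input"])
    return actual == case["expected"]
```

Keeping testcases as data files is what makes it practical to grow a suite into the thousands without writing new code for each one.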

One of our development teams was responsible for processing historical data orders, including subscription based recurring fulfillments, that were delivered to AWS buckets regularly. There were hundreds of different potential data types and file formats that could be delivered. Additionally, these files could depend on various data sources.

For this project I closely mentored another senior SDET on the creation of a framework that could handle the validation of a wide range of possibilities with a simplified and easily maintainable validation system.

We were able to define source types, source definitions, output types, and expected output values. Carefully crafting that in a flexible way supported the validation of a massively complex system.

As part of a technology migration project, we had to test that our system could still handle millions of multicast messages per second. To test this, we had to create a framework capable of sending various multicast messages at extremely high rates and determine that they were all being processed. We managed to send massive amounts of data quickly and validate the performance of the system.

We had many systems that worked with the creation and processing of flat CSV files. We created a project called the DLV that could validate the format of these CSVs as well as the integrity of the files. For each file type you could create a definition defining which columns the file should have and the type/ranges of data each column should have. The framework even had conditional logic based on other column values.

This framework could validate a million lines of data in seconds and was integrated into various parts of the system for ongoing monitoring. The core components of this system were also written in a universal way so that they could be integrated into other validation and test frameworks we created.
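To give a feel for definition driven CSV validation, here is a small Python sketch. It is not the DLV itself: the column names, the rules, and the `validate_csv` function are invented for illustration, including one conditional rule whose limit depends on another column.

```python
import csv
import io
from typing import Any, Dict, List

# Hypothetical file definition: expected columns plus one rule per column.
DEFINITION: Dict[str, Any] = {
    "columns": ["symbol", "side", "price", "quantity"],
    "rules": {
        "symbol": lambda row: row["symbol"].isalpha(),
        "side": lambda row: row["side"] in {"BUY", "SELL"},
        "price": lambda row: 0 < float(row["price"]) < 1_000_000,
        # Conditional rule: the minimum quantity depends on the 'side' column.
        "quantity": lambda row: int(row["quantity"]) >= (100 if row["side"] == "BUY" else 1),
    },
}


def validate_csv(text: str, definition: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems found in the CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames != definition["columns"]:
        return [f"header mismatch: {reader.fieldnames}"]
    errors = []
    # Data starts on line 2 because line 1 is the header.
    for line_no, row in enumerate(reader, start=2):
        for column, rule in definition["rules"].items():
            try:
                ok = rule(row)
            except (ValueError, TypeError, AttributeError):
                ok = False  # unparseable or missing values count as failures
            if not ok:
                errors.append(f"line {line_no}: bad {column} {row[column]!r}")
    return errors
```

Because the definition is plain data plus small rules, adding a new file type means writing a new definition, not a new validator.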

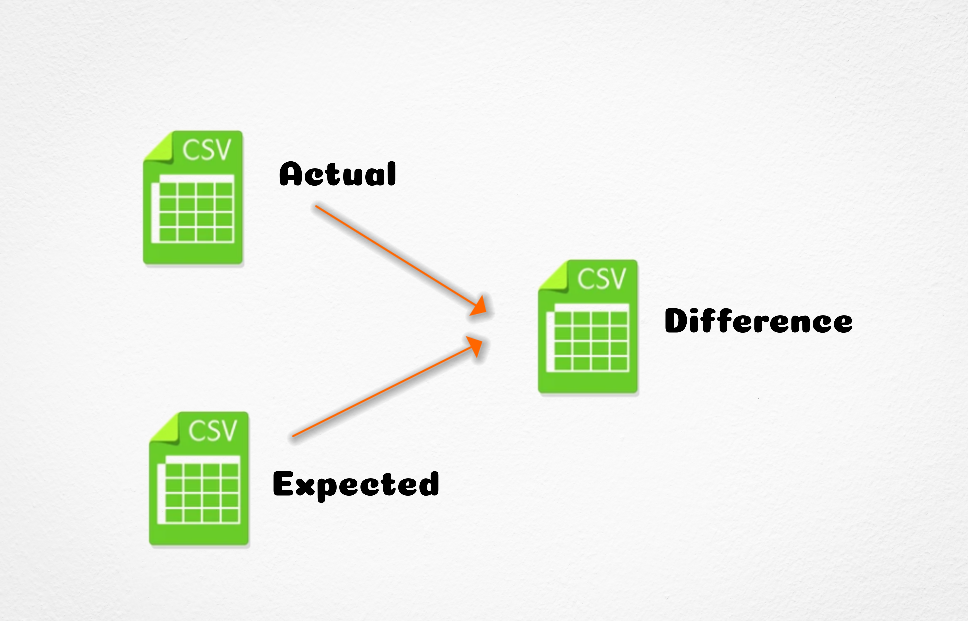

CSV Comparison? That sounds simple!

What may seem like a trivial task on the surface actually turns out to be an extremely interesting and challenging problem that will take you deep into computer science theory and challenge your knowledge of programming principles.

In an ordinary case, comparing line-by-line would be simple. But what if the data is 100,000,000 lines and it's all unsorted? That is too large to load into memory. Even sorting this much data for comparison means going to the disk repeatedly. Additionally, consider that the data in each column may be unnormalized, perhaps in different time formats or decimal precisions.

These are exactly the types of challenges I took on. Performance was also critical when we were migrating decades of data. The parameters of the comparison could greatly impact the algorithm that would be best suited for the comparison. Do the lines match up? Is it completely unsorted? Is it mostly sorted (multicast messages arriving in slightly different orders)? Does it need to be normalized? How much information is needed in the report? Should we try to match based on keys or just compare full lines? How much reporting data should we or can we accumulate in memory?

Whew! With all this complexity this project must have been a mess! Nope!

By making use of Java's string pool (string interning) we were able to greatly reduce the required memory. We implemented a disk based third party library that would merge sort data, but only when required in extreme cases. This was all managed using a strategy pattern that determined the best algo for a given comparison. Various reporting features could be enabled and disabled depending on whether it was used as part of a batch process or a human was doing the comparison. Using the adapter pattern, we could attach normalizing steps to a given column's data. We also used threading to have one thread read from disk into concurrent data structures while a separate thread did comparisons. This limited the amount of data in memory at any given point.
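The strategy and per-column normalizer ideas can be sketched in a few lines. The original was written in Java; this Python outline only shows the shape of the design, with hypothetical names throughout and two simple in-memory strategies standing in for the real algorithms (it does not cover the disk based merge sort or the threading).

```python
from collections import Counter
from typing import Callable, Dict, List

Normalizer = Callable[[str], str]
Strategy = Callable[[List[str], List[str]], int]


def normalize_line(line: str, normalizers: Dict[int, Normalizer]) -> str:
    """Apply a normalizer to each column that has one; leave the rest as-is."""
    cells = line.split(",")
    return ",".join(normalizers.get(i, str)(cell) for i, cell in enumerate(cells))


def compare_in_order(left: List[str], right: List[str]) -> int:
    """Strategy for row-aligned files: count positions that differ."""
    mismatches = sum(1 for a, b in zip(left, right) if a != b)
    return mismatches + abs(len(left) - len(right))


def compare_unordered(left: List[str], right: List[str]) -> int:
    """Strategy for unsorted files: count lines present on only one side."""
    left_counts, right_counts = Counter(left), Counter(right)
    only_left = sum((left_counts - right_counts).values())
    only_right = sum((right_counts - left_counts).values())
    return only_left + only_right


def choose_strategy(rows_aligned: bool) -> Strategy:
    """Pick the comparison algorithm from the properties of the input."""
    return compare_in_order if rows_aligned else compare_unordered


def compare(left: List[str], right: List[str],
            normalizers: Dict[int, Normalizer], rows_aligned: bool) -> int:
    """Normalize both sides, then run the selected strategy."""
    left_norm = [normalize_line(line, normalizers) for line in left]
    right_norm = [normalize_line(line, normalizers) for line in right]
    return choose_strategy(rows_aligned)(left_norm, right_norm)


def two_decimals(cell: str) -> str:
    """Example normalizer: render a number with two decimal places."""
    return f"{float(cell):.2f}"
```

For example, `compare(["A,1.50", "B,2.00"], ["B,2", "A,1.5"], {1: two_decimals}, rows_aligned=False)` reports no mismatches, because the second column is normalized and the order is ignored.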

If this wasn't enough, it could also connect to AWS sources to retrieve CSVs and handle batch comparison jobs that printed reports for each comparison. Using this we were able to successfully complete multiple migration projects with extreme clarity and peace of mind. In many cases we prevented serious issues.

One of my favorite projects of all time was creating a JavaScript based video game that taught my kids science and philosophy during the pandemic. It took a few thousand hours to create and was revealed through the kids completing a treasure hunt to find the game hidden nearby.

While in college I wrote a decision tree based AI in Lua that allowed the priest class to always make the optimal healing decision. This AI became incredibly proficient at keeping groups alive while using as little mana and as few high cooldown abilities as possible.

I'm currently working on a Java based stock AI investing project. The project connects to polygon.io APIs to retrieve data and stores it in MongoDB on an Ubuntu machine. MongoDB runs Atlas locally for data analysis. The AI itself uses neural networks as well as some evolutionary elements.

I was the creator and owner of a website called CulturedTees that allowed artists to submit designs that we sold on the site. Initially, we allowed a variety of designs and eventually focused in on the pop culture t-shirt gifting market. We had a presence on reddit gift exchange as well as Amazon. We also had a separate site for processing bulk custom orders. I maintained the website, relationships with artists, budgeting, marketing, collaborated with a professional photographer, and much more. The website slowly grew in popularity until I decided to abandon it to say 'yes' to more important things in my life.

Using Azure Application Insights I was able to pull all the information on the API endpoints we host and the parameters they take. I exported this information to a spreadsheet and then created a helper utility that transformed this into a complete Postman sample request repository. This repository consisted of 1500+ endpoints and was created in less than a week.
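A helper of this kind can be quite small. The sketch below is an illustration, not the original utility: it assumes the spreadsheet rows have already been read into dictionaries with `method`, `path`, and `params` keys (hypothetical names), and it follows my understanding of the Postman Collection v2.1 layout, using a `{{baseUrl}}` collection variable as a placeholder host.

```python
import json
from typing import Any, Dict, List

# Schema identifier used by Postman Collection v2.1 files.
SCHEMA = "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"


def build_collection(name: str, endpoints: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Turn endpoint rows into a Postman-style collection dictionary."""
    items = []
    for endpoint in endpoints:
        # One empty query entry per known parameter, ready to be filled in.
        query = [{"key": param, "value": ""} for param in endpoint["params"]]
        raw = "{{baseUrl}}" + endpoint["path"]
        if query:
            raw += "?" + "&".join(f"{param}=" for param in endpoint["params"])
        items.append({
            "name": f'{endpoint["method"]} {endpoint["path"]}',
            "request": {
                "method": endpoint["method"],
                "header": [],
                "url": {"raw": raw, "query": query},
            },
        })
    return {"info": {"name": name, "schema": SCHEMA}, "item": items}


def to_json(collection: Dict[str, Any]) -> str:
    """Serialize the collection so it can be saved and imported."""
    return json.dumps(collection, indent=2)
```

The value of the approach is that the collection is regenerated from data, so it can be rebuilt whenever the endpoint inventory changes.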

Created a K6 performance test framework from scratch. This framework was capable of smoke, stress, spike, soak, and breakpoint testing. It tested both single endpoints and workflows. Benchmarks were produced from Azure Application Insights data research that I completed. Request parameters used distributions of data that matched production usage. I generated databases of three different sizes to target it against to detect scalability issues. API helper methods were generated for all endpoints in one day using AI. We were working towards complete simulation.

Created a JavaScript/TypeScript based Playwright framework from scratch. The framework used very well defined patterns for its UI models (Page Objects) and its API helper methods. This allowed us to programmatically generate all these classes so they didn't need to be hand coded. The testcases themselves were written by test engineers. There were three versions of this framework that shared the same common code and patterns. We scaled to hundreds of testcases within months.

Creating test data was critically important to us and an existing pain point. I created a database scanning utility that scanned our existing MS SQL based databases. It recorded all relevant metrics on the type and shape of the data. It recorded datatypes, min, max, and average values. It recorded how often values were null. It identified reference tables and saved them. It also recorded the distribution of data for each column using Kernel Density Analysis, later converted to Cubic Spline Density Analysis for simplicity. This allowed us to generate realistic databases of any size on demand that we used for performance testing.
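The profiling step can be pictured with a short Python sketch. This is a simplified stand-in, not the original utility: it works on an in-memory list instead of an MS SQL table, the function names are hypothetical, and the generator resamples observed values directly instead of fitting a kernel density or cubic spline model.

```python
import random
from statistics import mean
from typing import Any, Dict, List, Optional


def profile_column(values: List[Optional[float]]) -> Dict[str, Any]:
    """Record basic shape metrics for one column; None represents SQL NULL."""
    present = [value for value in values if value is not None]
    return {
        "count": len(values),
        "null_rate": (len(values) - len(present)) / len(values) if values else 0.0,
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "mean": mean(present) if present else None,
    }


def generate_column(values: List[Optional[float]], size: int,
                    seed: int) -> List[Optional[float]]:
    """Generate 'size' synthetic values with a similar null rate and spread."""
    rng = random.Random(seed)  # seeded so generated data is reproducible
    stats = profile_column(values)
    present = [value for value in values if value is not None]
    generated: List[Optional[float]] = []
    for _ in range(size):
        if not present or rng.random() < stats["null_rate"]:
            generated.append(None)
        else:
            # Simplification: resample observed values instead of a fitted density.
            generated.append(rng.choice(present))
    return generated
```

Because `size` is independent of the source column length, the same profile can drive small, medium, and large generated datasets.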